In 2026, media organizations face a growing trust crisis due to the rise of synthetic media and "information collapse." Collaborative AI frameworks offer solutions by verifying content authenticity, ensuring secure data management, and promoting cooperation between AI developers and media companies. Three key frameworks address these challenges:

- Thomson Foundation's AI Value Framework: A four-stage guide for newsrooms to gradually adopt AI, focusing on workflow integration and operational efficiency. Example: South African newsroom Briefly News increased article output by 122% using AI tools.

- Partnership on AI (PAI) Human-AI Collaboration Framework: Focuses on ethical AI use with principles like consent, transparency, and disclosure. It includes tools for labeling and tracing AI-generated content. Example: Code for Africa combated misinformation during South Africa’s elections using PAI guidelines.

- SmythOS Platform: A technical solution for automating content production using AI agents and integrating tools across platforms. It simplifies workflows with a drag-and-drop interface and secure data sharing.

Each framework offers distinct strengths for improving efficiency, ethical standards, or technical capabilities in media operations. Selecting the right framework depends on your newsroom's priorities, resources, and readiness for AI integration.



1. Thomson Foundation's AI Value Framework

The Thomson Foundation's AI Value Framework provides newsrooms with a structured guide to move from experimenting with AI to fully integrating it into their operations. Created alongside media partners in the Western Balkans, this framework lays out a four-stage process that addresses both the technical and organizational aspects of AI adoption. Its design not only simplifies the transition to AI but also encourages collaboration between editorial and technical teams, aligning with broader initiatives to improve data sharing within the media sector.

Stages/Phases

The framework is divided into four key stages:

- Stage 1: Awareness – This stage focuses on introducing AI concepts to staff through workshops and informal sessions like "lunch and learn." Employees get familiar with basic AI writing and translation tools and learn prompting techniques, which helps reduce resistance to AI adoption.

- Stage 2: Activation – Here, newsrooms pilot specific AI use cases, such as recommendation engines or automated fact-checking tools. "AI Ambassadors" are identified to encourage experimentation and promote AI initiatives across various departments.

- Stage 3: Integration – AI becomes part of the newsroom's core processes, with tools embedded into content management systems and production workflows. Close collaboration between journalists and AI teams ensures that AI models are tailored to the newsroom's specific needs.

- Stage 4: Operation – At this stage, AI is institutionalized through formal governance policies, clear data privacy standards, and the establishment of leadership roles like Chief AI Officer.

This step-by-step approach allows newsrooms to adopt AI gradually while ensuring its effective integration into everyday operations.
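Because the stages are sequential, a newsroom could track its progress as a simple ordered state. This is a hypothetical sketch. The checklist items paraphrase the stage descriptions above, and the rule that every item must be done before advancing is an assumption, not something the framework specifies.

```python
from enum import IntEnum
from typing import Iterable, Optional


class Stage(IntEnum):
    """The four stages, in the order a newsroom moves through them."""
    AWARENESS = 1
    ACTIVATION = 2
    INTEGRATION = 3
    OPERATION = 4


# Hypothetical checklist paraphrased from the stage descriptions.
# The "finish every item before advancing" rule is an assumption.
CHECKLIST = {
    Stage.AWARENESS: ["run workshops", "hold lunch and learn sessions", "practice prompting"],
    Stage.ACTIVATION: ["pilot a use case", "name AI Ambassadors"],
    Stage.INTEGRATION: ["embed tools in the CMS", "tailor models with journalists"],
    Stage.OPERATION: ["adopt a governance policy", "set data privacy standards",
                      "appoint a Chief AI Officer"],
}


def next_stage(current: Stage) -> Optional[Stage]:
    """Return the following stage, or None once the newsroom reaches Operation."""
    return None if current is Stage.OPERATION else Stage(current + 1)


def can_advance(current: Stage, completed: Iterable[str]) -> bool:
    """True when every checklist item for the current stage is done."""
    return current is not Stage.OPERATION and set(CHECKLIST[current]) <= set(completed)


if __name__ == "__main__":
    done = ["pilot a use case", "name AI Ambassadors"]
    if can_advance(Stage.ACTIVATION, done):
        print("Ready for", next_stage(Stage.ACTIVATION).name)
```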

Media Applicability

Real-world examples highlight the framework's potential. In December 2025, South African newsroom Briefly News introduced "Editorial Eye", an AI proofreading tool led by Director Rianette Cluley. This tool increased daily article output from 90 to 200 and boosted page views by 22% over six months. Similarly, Mail & Guardian implemented an automated sub-editing tool between August and December 2025. This tool cut the time needed for headline generation and hashtag suggestions in half, all while keeping human oversight intact.

"The sub-editing [function] is basically marking your homework effectively. It's used as a tool that is backstopped by human intervention the whole way along."

– Douglas White, Circulation and Subscription Officer, Mail & Guardian

These examples showcase how AI can lead to measurable improvements in newsroom efficiency and output.
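The efficiency figures above are relative changes. A minimal helper makes the arithmetic explicit: moving from 90 to 200 articles a day is an increase of about 122%, the figure quoted for Briefly News. The function name is illustrative.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed as a percentage."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


if __name__ == "__main__":
    # Briefly News: daily article output rose from 90 to 200.
    print(f"{percent_change(90, 200):.0f}%")  # 122%
```

The same calculation applies to any before-and-after metric a newsroom tracks, such as page views or impressions.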

Implementation Ease

One of the framework's standout features is its encouragement to "start small." Newsrooms are advised to begin with low-risk projects, like summarizing transcripts or translating interviews, before tackling more complex AI applications. For instance, the Pondoland Times adopted AI auto-posting and an AI-generated news flash avatar in late 2025. This move increased monthly impressions from 2.4 million to 10 million and generated an additional 20,000 ZAR (around $1,100) in revenue within just one month.

The framework also emphasizes rapid prototyping and agile methods, allowing newsrooms to experiment and adapt quickly while keeping costs under control. This approach ensures that even smaller media organizations can explore AI without overcommitting resources upfront.
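A "start small" pilot can keep the kind of human backstop Mail & Guardian describes by making editor approval an explicit step. The sketch is hypothetical. `draft_headline` is a trivial stand-in for whatever AI tool a newsroom pilots, and nothing reaches the publish list without an editor's decision.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Suggestion:
    article_id: str
    headline: str
    approved: bool = False


def draft_headline(text: str) -> str:
    """Hypothetical stand-in for an AI headline tool: the first sentence, trimmed."""
    return text.split(".")[0].strip()[:70]


def review(s: Suggestion, editor: Callable[[Suggestion], Optional[str]]) -> Suggestion:
    """Human gate: the editor returns a (possibly edited) headline to approve, or None to reject."""
    decision = editor(s)
    if decision is None:
        return Suggestion(s.article_id, s.headline, approved=False)
    return Suggestion(s.article_id, decision, approved=True)


def publishable(items: List[Suggestion]) -> List[Suggestion]:
    """Only editor-approved suggestions move on to publication."""
    return [s for s in items if s.approved]
```

For example, `review(s, lambda x: x.headline + " overnight")` approves an edited headline, while `review(s, lambda x: None)` rejects it. Either way, the editor has the final say.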

2. Partnership on AI (PAI) Human-AI Collaboration Framework

The Partnership on AI (PAI) framework emphasizes ethical responsibility and transparency in managing AI-generated content. Created with input from over 100 stakeholders worldwide, it offers media organizations a set of guidelines to handle synthetic media while maintaining public trust. At its launch, 18 organizations, including major broadcasters like the BBC and CBC/Radio-Canada, signed on as inaugural partners. This framework adds an ethical layer to the operational and technical approaches covered by the other two frameworks in this comparison.

Ethical Focus

The framework is built on three core principles: Consent, Disclosure, and Transparency. It categorizes stakeholders into three main groups - Builders, Creators, and Distributors/Publishers - each with specific responsibilities. For media organizations, this means implementing informed consent protocols for individuals featured in AI-generated content, even if prior consent was already obtained. The framework is designed to evolve, with a Community of Practice where partners share annual case studies to test and refine the guidelines in real-world scenarios.

"The burden to interpret generative AI content should not be placed on audiences themselves, but on the institutions building, creating, and distributing content." – Code for Africa

Data Sharing Mechanisms

To ensure transparency, PAI recommends a two-tiered approach to data sharing:

- Direct Disclosure: Visible indicators like labels, watermarks, and disclaimers that audiences can easily identify.

- Indirect Disclosure: Cryptographic provenance through the C2PA standard, embedding metadata to enhance traceability.
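The two tiers can be illustrated with a minimal sketch, assuming a newsroom publishes an AI-generated image. The label wording and record fields are hypothetical. The JSON record is only a simplified stand-in: real indirect disclosure uses C2PA manifests, which are cryptographically signed and embedded according to the C2PA specification.

```python
import hashlib
import json

# Hypothetical label text; each newsroom would set its own wording.
LABEL = "This image was generated with AI."


def direct_disclosure(caption: str) -> str:
    """Direct disclosure: a visible label appended to the caption readers see."""
    return f"{caption} [{LABEL}]"


def indirect_disclosure(asset: bytes, generator: str) -> str:
    """Indirect disclosure (simplified): a machine-readable record tied to the
    exact bytes of the asset through its SHA-256 hash. Not the C2PA format."""
    record = {
        "sha256": hashlib.sha256(asset).hexdigest(),
        "generator": generator,  # hypothetical field name
        "ai_generated": True,
    }
    return json.dumps(record, sort_keys=True)
```

Because the record contains the asset's hash, changing even one byte of the image makes the record stop matching. That is the property provenance standards build on.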

For AI developers, the framework also requires a detailed "key ingredient list." This includes information on compute resources, parameters, architecture, and training data methods, enabling independent safety evaluations.
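A newsroom evaluating an AI vendor could track these disclosures as structured data. This is a hypothetical schema. The field names mirror the four items listed above, and the completeness check is an illustration, not something PAI prescribes.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class IngredientList:
    """Hypothetical record of a developer's disclosures."""
    compute: str
    parameters: str
    architecture: str
    training_data_methods: str


def missing_fields(entry: IngredientList) -> List[str]:
    """Names of disclosure fields left blank, in declaration order."""
    return [f.name for f in fields(entry) if not getattr(entry, f.name).strip()]
```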

Media Applicability

The framework’s impact is evident in real-world applications. For example, in May 2024, just before South Africa’s general elections, Code for Africa analyzed an AI-generated video of a burning flag distributed by a political party. By adhering to PAI guidelines, the organization demanded full disclosure and updated journalistic policies to combat misinformation. Google has also integrated the framework into its platforms like YouTube, Search, and Google Ads, introducing features such as "About this image" to provide context beyond basic labels. Similarly, Meedan applied the framework’s disclosure recommendations through its open-source tool, Check, to identify and counter synthetic content in South Asia.

"Establishing principles for the responsible use of synthetic media has enormous value as many organisations grapple with its implications." – Jatin Aythora, Director of Research and Development, BBC

Implementation Ease

The framework offers flexibility by distinguishing between Baseline Practices and Recommended Practices, allowing organizations to scale their efforts based on available resources. It encourages media organizations to start by publishing a clear, accessible policy on synthetic media and gradually adopt C2PA standards for content provenance. If harmful synthetic content is unintentionally distributed, the framework advises immediate corrective actions, such as labeling, downranking, or removing the content. This adaptable approach ensures organizations of all sizes can implement the framework effectively.
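A distributor might encode the corrective responses named above as an explicit, reviewable rule. The sketch assumes a hypothetical 0 to 10 harm score, and its thresholds are illustrative choices, not part of the PAI framework.

```python
from enum import Enum


class Action(Enum):
    LABEL = "label"
    DOWNRANK = "downrank"
    REMOVE = "remove"


def corrective_action(harm: int) -> Action:
    """Map a hypothetical harm score (0-10) to one of the framework's responses.
    The thresholds are assumptions for illustration only."""
    if not 0 <= harm <= 10:
        raise ValueError("harm must be between 0 and 10")
    if harm >= 7:
        return Action.REMOVE
    if harm >= 4:
        return Action.DOWNRANK
    return Action.LABEL
```

Writing the rule down, even this simply, gives editors something concrete to debate and revise as their policy matures.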

3. SmythOS Platform for AI-Media Integration

SmythOS focuses on streamlining operations for media organizations by enabling them to create autonomous AI agents for content production and distribution across multiple channels. With integrations spanning over 300,000 apps, APIs, and data sources, it provides the technical backbone for smooth collaboration between AI developers and media teams. Unlike platforms that prioritize ethical oversight or gradual adoption, SmythOS zeroes in on optimizing workflows for immediate results.

Data Sharing Mechanisms

At the heart of SmythOS is the Data Pool, a centralized repository where teams can upload files or text. These inputs are then indexed into searchable embeddings, allowing AI agents to leverage retrieval-augmented generation (RAG) to produce content grounded in the organization’s knowledge base. Alongside this, the SmythOS Vault ensures secure storage of API keys and integration tokens with encryption. This feature enables teams to share credentials for platforms like Medium or WordPress safely, without exposing sensitive information in code. For better project management, Collaborative Spaces allow work to be divided into distinct environments with role-based access, keeping various media projects or client data neatly separated.
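The retrieval step can be sketched in a few lines. This is not SmythOS code. Word-count vectors stand in for the learned embeddings a real Data Pool would use, and `DataPoolSketch` and `build_prompt` are hypothetical names. The idea is the same, though: index documents, find the ones closest to a question, and pass them to the model as context.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Stand-in 'embedding': lowercase word counts (real systems use learned vectors)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class DataPoolSketch:
    """Hypothetical index: store documents with their vectors, retrieve the closest."""

    def __init__(self) -> None:
        self.docs: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.docs.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(pool: DataPoolSketch, question: str) -> str:
    """Ground the model's answer in retrieved newsroom documents."""
    context = "\n".join(pool.retrieve(question, k=2))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```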

"The Data Pool is the central interface for managing indexed data in SmythOS... This enables agents to use retrieval-augmented generation (RAG) and answer queries using relevant context from your documents." – SmythOS Documentation

Media Applicability

SmythOS extends its capabilities to practical uses for media organizations. Through integrations with tools like Replicate and Hugging Face, teams can generate multi-modal content. For example, they can create AI-generated music from text prompts, produce images using Stable Diffusion, or process video content via FFmpeg. The platform also simplifies workflows by connecting tools like Google Docs with publishing platforms such as WordPress, automating content transfer and formatting.
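To show what a Docs-to-WordPress step does under the hood, this sketch calls the WordPress REST API directly. SmythOS itself exposes such connectors without code. The request is built but not sent. It authenticates with a WordPress application password read from environment variables, echoing the Vault's idea of keeping credentials out of code. The variable names `WP_USER` and `WP_APP_PASSWORD` are assumptions.

```python
import base64
import json
import os
import urllib.request


def build_wordpress_draft(site: str, title: str, html: str) -> urllib.request.Request:
    """Build (but do not send) a request that creates a *draft* post through
    the WordPress REST API. Credentials come from hypothetical env variables."""
    user = os.environ["WP_USER"]
    app_password = os.environ["WP_APP_PASSWORD"]
    token = base64.b64encode(f"{user}:{app_password}".encode()).decode()
    body = json.dumps({"title": title, "content": html, "status": "draft"}).encode()
    return urllib.request.Request(
        url=f"{site.rstrip('/')}/wp-json/wp/v2/posts",
        data=body,
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Sending it is a matter of `urllib.request.urlopen(req)`. Posting with `"status": "draft"` rather than `"publish"` leaves an editor to review the article in WordPress before it goes live.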

Implementation Ease

Staying true to its goal of promoting collaboration, SmythOS includes a visual workflow canvas with a drag-and-drop interface. This feature enables journalists and editors to design AI processes without needing coding expertise. A library of over 40 pre-built components allows for quick development of AI agents. To further simplify deployment, the platform includes real-time testing tools like the "Chat with Agent" feature and visual debugging options, making it easier to refine an agent’s behavior before it goes live.

"Through collaborative intelligence, AI can complement humans, not replace them. Collaborative intelligence empowers teams to achieve goals that might have been out of reach without the help of AI." – SmythOS

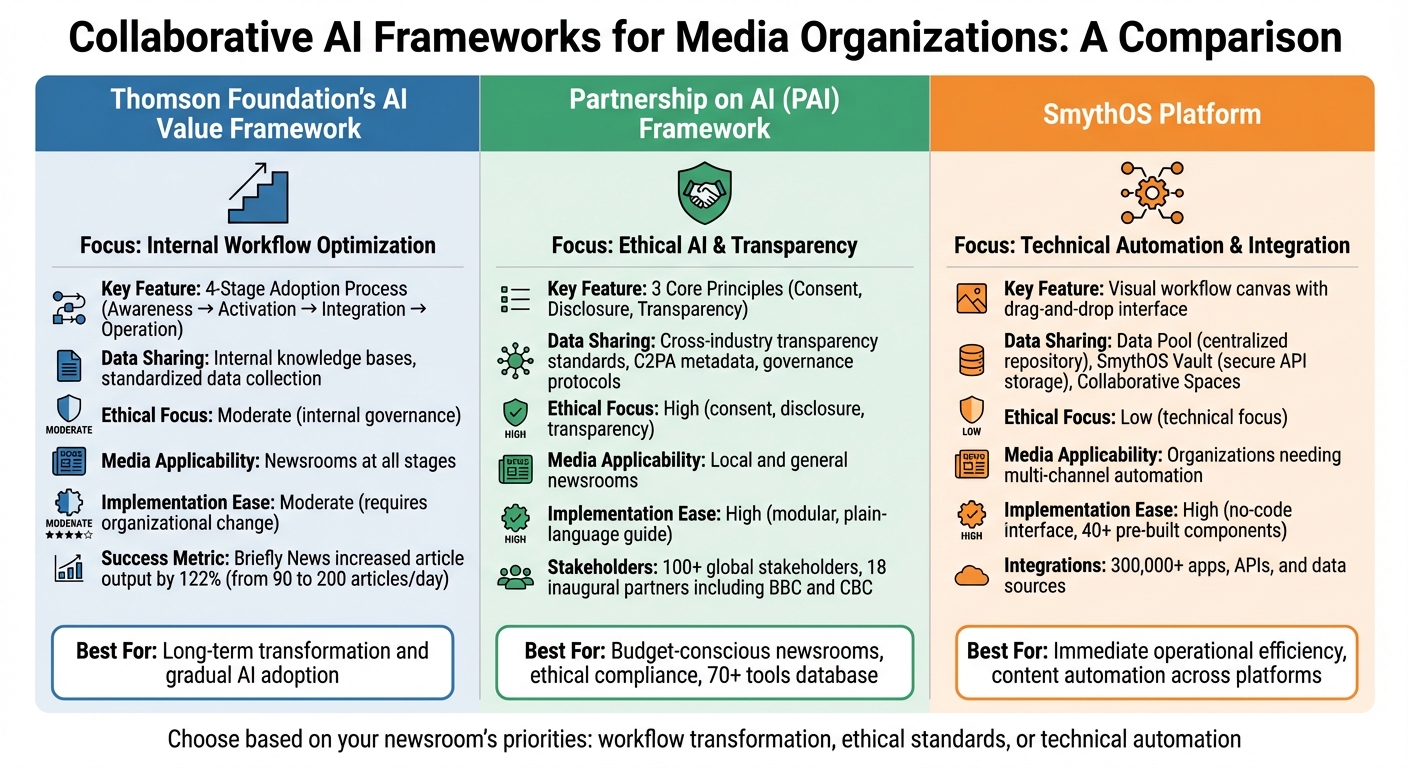

Comparison of Framework Strengths and Weaknesses

Comparison of Three AI Frameworks for Media Organizations

Every framework brings its own strengths to the table, shaping its effectiveness for media organizations. The Thomson Foundation's AI Value Framework focuses on improving internal newsroom workflows. It achieves this by creating shared knowledge bases and standardizing data collection during its Integration stage. However, its scope is mostly confined to internal newsroom management.

On the other hand, the Partnership on AI Framework shines in its ethical approach, addressing critical areas like consent, transparency, and disclosure. Developed with input from over 100 global stakeholders, it features a modular 10-step adoption guide that newsrooms can implement in phases based on their readiness. Its plain-language guidelines make it approachable for non-technical staff and encourage newsrooms to build on tools they already use.

Here’s a quick comparison of their key strengths and limitations:

| Framework | Data Sharing Mechanisms | Ethical Focus (Key Aspects) | Media Applicability | Implementation Ease |
|---|---|---|---|---|
| Thomson Foundation | Internal knowledge bases, standardized data collection | Moderate (internal governance) | Newsrooms at all stages | Moderate (requires organizational change) |
| Partnership on AI | Cross-industry transparency standards, governance protocols | High (consent, disclosure, transparency) | Local and general newsrooms | High (modular, plain-language guide) |

The Partnership on AI Framework also benefits from its Steering Committee, which includes nine experts from industry, newsrooms, and academia. This diverse expertise strengthens its practical relevance, further highlighting the importance of using frameworks tailored to the unique needs of media organizations for effective AI integration.

Conclusion

Addressing the digital trust crisis requires careful selection of an AI framework that aligns with your newsroom's needs. For budget-conscious newsrooms, the Partnership on AI Framework is a practical starting point. It offers a sortable database of over 70 tools, organized by cost and expertise requirements. For example, in November 2023, El Vocero de Puerto Rico used this framework to implement an automated Spanish translation system for National Hurricane Center alerts. By integrating the DeepL API, they were able to quickly publish critical alerts in Spanish.

For organizations aiming for long-term transformation, the Thomson Foundation's AI Value Framework outlines a clear path through four maturity stages. After building familiarity in Stage 1: Awareness, teams can move into Stage 2: Activation with low-risk pilot projects, such as using AI tools to summarize transcripts or extract metadata. These initiatives build momentum without requiring significant investment. A regional news agency successfully applied this strategy, processing 450 articles in just 35 minutes with local AI, significantly reducing their workload.
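For scale, 450 articles in 35 minutes works out to about 4.7 seconds per article (2,100 seconds / 450). Metadata extraction of this kind can run entirely on local hardware. The sketch is a deliberately simple, hypothetical stand-in for a local model: it tags an article with its most frequent words that are not stopwords. The stopword list and `extract_tags` name are illustrative.

```python
import re
from collections import Counter
from typing import List

# Small illustrative stopword list; a real system would use a fuller one.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "on", "for", "is",
             "was", "at", "by", "with", "from", "after", "its"}


def extract_tags(text: str, k: int = 3) -> List[str]:
    """Return the k most frequent non-stopword terms; ties keep first-seen order."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 2)
    return [w for w, _ in counts.most_common(k)]
```

Nothing here leaves the machine, so a newsroom can run it over an entire archive without per-request fees.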

The key is to match the framework to your newsroom's current priorities. For under-resourced teams, focusing on tasks like metadata extraction or SEO content classification can yield immediate efficiency gains without compromising editorial control. As Renee Richardson, Managing Editor of the Brainerd Dispatch, shared after adopting an AI-powered Public Safety Reporting System in November 2023:

"Time is the one thing we typically want more of in order to do all the things on our work lists. And it's the one thing that seems to slip away with so many demands from all sides in a modern newsroom."

While AI can enhance efficiency, human oversight remains vital. Set performance benchmarks, carefully review training data, assess risks, and establish criteria for retiring outdated tools. Additionally, consider on-device AI solutions for high-volume tasks to avoid recurring cloud API fees. Once the hardware is in place, processing 10,000 articles locally costs little more than processing 100, whereas per-request API charges grow with every article. A thoughtful, context-sensitive approach ensures operational efficiency while maintaining editorial integrity.
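The cost argument is a comparison between a linear cost and a mostly fixed one. The prices here are hypothetical placeholders, not quotes from any provider. The model shows why volume matters and where the break-even point lies.

```python
def cloud_cost(articles: int, price_per_article: float) -> float:
    """Per-request API pricing: cost grows linearly with volume."""
    return articles * price_per_article


def local_cost(articles: int, fixed_cost: float, marginal_per_article: float = 0.0) -> float:
    """On-device processing: a fixed hardware cost plus a small marginal (e.g. energy) cost."""
    return fixed_cost + articles * marginal_per_article


def break_even(fixed_cost: float, price_per_article: float, marginal_per_article: float = 0.0) -> float:
    """Article volume above which local processing becomes the cheaper option."""
    if price_per_article <= marginal_per_article:
        raise ValueError("cloud price must exceed the local marginal cost")
    return fixed_cost / (price_per_article - marginal_per_article)


if __name__ == "__main__":
    PRICE, FIXED = 0.01, 50.0  # hypothetical: $0.01 per article vs $50 of hardware per month
    for n in (100, 10_000):
        print(n, cloud_cost(n, PRICE), local_cost(n, FIXED))
    print("break-even volume:", break_even(FIXED, PRICE))
```

Below the break-even volume, paying per request is cheaper. Above it, local processing wins, which is why the advice targets high-volume tasks.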

FAQs

Which framework should my newsroom start with?

For newsrooms stepping into the world of AI, Lukas Görög's "AI value framework" offers a clear roadmap. It breaks the process into four stages: Awareness, Activation, Integration, and Operation. These steps are designed to help organizations adopt AI in a way that's both ethical and effective. Additionally, the Partnership on AI provides a detailed 10-step guide that emphasizes ethics and risk management. By combining these frameworks, newsrooms can implement AI responsibly, ensuring it aligns with editorial principles while tackling both operational and ethical hurdles.

How can we prove AI content is authentic?

To establish trust in AI-generated content, content provenance standards like the one from the Coalition for Content Provenance and Authenticity (C2PA) play a key role. They attach tamper-evident credentials to digital assets: cryptographically signed metadata that includes hashes of the content, so any later alteration can be detected. This process records the content's origin and the edits made along the way.

Additionally, open-source tools, such as the Content Authenticity Initiative's c2patool, make it easier to integrate these credentials into publishing platforms, promoting transparency. By using these systems, creators can give audiences a verifiable record of where their content came from, even as AI-generated media becomes increasingly difficult to distinguish from original work.
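Tamper evidence rests on two checks: the signature must match the metadata, and the hash in the metadata must match the asset. The minimal sketch uses a shared-secret HMAC as a stand-in. Real C2PA credentials use certificate-based digital signatures and a defined manifest format, and the key and claim names here are hypothetical.

```python
import hashlib
import hmac
import json

SECRET = b"demo-key"  # hypothetical shared secret; C2PA uses certificate-based signatures instead


def _sign(manifest: dict) -> str:
    payload = json.dumps(manifest, sort_keys=True).encode()
    return hmac.new(SECRET, payload, hashlib.sha256).hexdigest()


def sign_manifest(asset: bytes, claims: dict) -> dict:
    """Bind claims (creator, edits, ...) to the asset's hash and sign the result."""
    manifest = {"asset_sha256": hashlib.sha256(asset).hexdigest(), **claims}
    return {"manifest": manifest, "signature": _sign(manifest)}


def verify(asset: bytes, signed: dict) -> bool:
    """False if either the manifest or the asset was changed after signing."""
    if not hmac.compare_digest(_sign(signed["manifest"]), signed["signature"]):
        return False  # metadata was altered
    return signed["manifest"]["asset_sha256"] == hashlib.sha256(asset).hexdigest()
```

Editing either the image bytes or a claim such as the creator's name makes `verify` return `False`. That is what "tamper-evident" means in practice: alterations are detectable, not impossible.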

What policies should we set for synthetic media?

Policies surrounding synthetic media need to focus on responsible practices, openness, and minimizing harm. Organizations like the Partnership on AI suggest actionable steps such as clearly labeling AI-generated content, involving communities in decision-making, and updating policies to keep pace with technological advancements. Ethical guidelines should tackle challenges like non-consensual deepfakes and the spread of false information. The goal is to promote clarity, accountability, and public trust while staying consistent with current digital media laws.